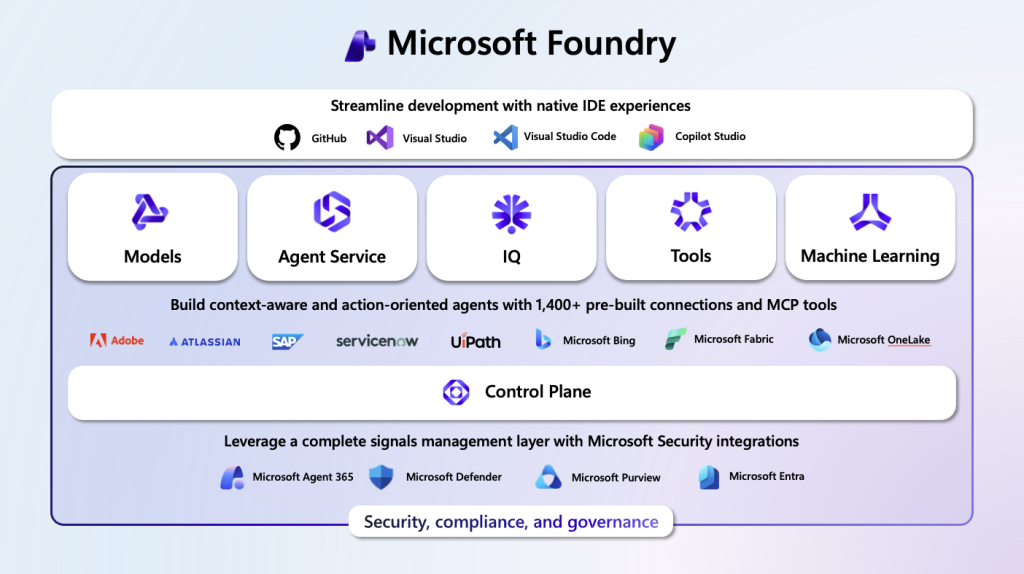

As organisations accelerate their adoption of AI, the lifecycle of the underlying models becomes just as important as the applications built on top of them. Microsoft Foundry introduces a unified, scalable way to access, evaluate, deploy, and manage more than 11,000 frontier, domain‑specialised, and open‑source models, while simplifying governance, performance monitoring, and lifecycle management.

In this post, we break down what’s changing, why it matters, and how teams can stay ahead.

Microsoft Foundry brings together models from Azure OpenAI, partner ecosystems, community-driven open-source projects, and Microsoft's own model catalog. This unified approach helps teams:

- Avoid model lock‑in

- Explore and compare models based on metrics and performance

- Switch models through a unified API without rewriting code

- Scale deployments across serverless, standard, or provisioned-throughput options

With consistent APIs and deployment tooling, teams can move from prototype to production faster, with fewer architectural decisions slowing them down.
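The point of a unified API is that switching models becomes a configuration change rather than a code change. The minimal sketch below assumes an OpenAI-style chat completions request body; the helper `build_chat_request` and the deployment names in `CONFIG` are hypothetical, and the snippet only builds payloads rather than calling any Foundry endpoint.

```python
def build_chat_request(model: str, prompt: str, temperature: float = 0.2) -> dict:
    """Build an OpenAI-style chat completions request body (hypothetical helper).

    Only the "model" field depends on which model is selected, so switching
    models means changing configuration, not the calling code.
    """
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
    }


# Hypothetical deployment names; a real application would read these from config.
CONFIG = {
    "primary_model": "my-primary-deployment",
    "fallback_model": "my-fallback-deployment",
}

primary = build_chat_request(CONFIG["primary_model"], "Summarise this support ticket.")
fallback = build_chat_request(CONFIG["fallback_model"], "Summarise this support ticket.")

# The two requests differ only in the model they target.
print(primary["model"], fallback["model"])
```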

Understanding the Model Lifecycle

Foundry introduces a clear, predictable lifecycle to guide teams on when and how models should be used:

Lifecycle stages

- Preview – experimental and subject to change

- Generally Available (GA) – stable and production‑ready

- Legacy – deprecation announced but not yet in effect

- Deprecated – no new deployments allowed; existing deployments keep running

- Retired – no longer available; existing deployments stop responding
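The stage rules above map naturally onto a small lookup that deployment tooling could consult before creating or promoting a deployment. This is a simplified sketch: the `Stage` enum and helper functions are hypothetical, and treating Legacy models as still accepting new deployments is an assumption, since the list above does not say so explicitly.

```python
from enum import Enum


class Stage(Enum):
    """Model lifecycle stages (hypothetical names mirroring the list above)."""
    PREVIEW = "preview"
    GA = "ga"
    LEGACY = "legacy"
    DEPRECATED = "deprecated"
    RETIRED = "retired"


def allows_new_deployments(stage: Stage) -> bool:
    # Deprecated and retired models accept no new deployments.
    # ASSUMPTION: Legacy models still do, because deprecation has not happened yet.
    return stage in (Stage.PREVIEW, Stage.GA, Stage.LEGACY)


def existing_deployments_respond(stage: Stage) -> bool:
    # Existing deployments keep running until the model is retired.
    return stage is not Stage.RETIRED


def production_ready(stage: Stage) -> bool:
    # Only GA is described as stable and production-ready.
    return stage is Stage.GA


for stage in Stage:
    print(stage.value,
          allows_new_deployments(stage),
          existing_deployments_respond(stage),
          production_ready(stage))
```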

Azure OpenAI models follow similar rules: each GA model stays available for at least 12 months, and existing customers get an additional six months before it is retired.
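Reading that rule as 12 months of GA availability followed by six more months for existing customers gives a quick way to estimate the earliest date a deployment could stop working. The sketch below is illustrative only: `earliest_retirement` is a hypothetical helper, the GA date is made up, and the dates published for a specific model always take precedence over this estimate.

```python
import calendar
from datetime import date


def add_months(d: date, months: int) -> date:
    """Add calendar months, clamping the day to the length of the target month."""
    month_index = d.month - 1 + months
    year = d.year + month_index // 12
    month = month_index % 12 + 1
    day = min(d.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def earliest_retirement(ga_date: date, ga_months: int = 12, extra_months: int = 6) -> date:
    # Hypothetical estimate: minimum GA window plus the extra period
    # for existing customers, as described in the text.
    return add_months(ga_date, ga_months + extra_months)


ga = date(2025, 1, 31)  # illustrative GA date, not a real model's
print(add_months(ga, 12))       # 2026-01-31: end of the minimum GA window
print(earliest_retirement(ga))  # 2026-07-31: earliest estimated retirement
```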

Built‑In Tools for Smarter Model Selection

Foundry makes choosing the right model far more transparent:

- Benchmarks and leaderboards

- Automated evaluations

- A/B experimentation

- Model Router – automatically chooses the best model based on quality, latency, or cost

- Real‑time performance monitoring

Instead of manually comparing dozens of models, developers can rely on standardised metrics and even automate routing decisions.
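To illustrate what automated routing involves, the sketch below picks a model for one objective (quality, latency, or cost) from the candidates that clear a minimum quality bar. It is not how Foundry's Model Router is implemented; the `Candidate` fields, the `route` function, and the metric values are hypothetical, and in practice the numbers would come from benchmarks, evaluations, and monitoring.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Candidate:
    name: str           # hypothetical deployment name
    quality: float      # evaluation score in [0, 1]; higher is better
    latency_ms: float   # observed median latency; lower is better
    cost_per_1k: float  # price per 1,000 tokens; lower is better


def route(candidates: List[Candidate], objective: str, min_quality: float = 0.0) -> Candidate:
    """Return the best candidate for `objective` among those meeting `min_quality`."""
    eligible = [c for c in candidates if c.quality >= min_quality]
    if not eligible:
        raise ValueError("no candidate meets the quality floor")
    if objective == "quality":
        return max(eligible, key=lambda c: c.quality)
    if objective == "latency":
        return min(eligible, key=lambda c: c.latency_ms)
    if objective == "cost":
        return min(eligible, key=lambda c: c.cost_per_1k)
    raise ValueError(f"unknown objective: {objective!r}")


# Made-up metrics for illustration only.
models = [
    Candidate("large-model", quality=0.92, latency_ms=900, cost_per_1k=10.0),
    Candidate("medium-model", quality=0.85, latency_ms=400, cost_per_1k=2.0),
    Candidate("small-model", quality=0.70, latency_ms=150, cost_per_1k=0.5),
]

print(route(models, "cost").name)                      # small-model
print(route(models, "cost", min_quality=0.8).name)     # medium-model
print(route(models, "latency", min_quality=0.9).name)  # large-model
```

The quality floor keeps a cost- or latency-driven choice from silently trading away too much accuracy.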

Deployment Options: Standard, Provisioned, or Batch

Choosing how you deploy matters as much as which model you choose:

- Standard – on-demand and flexible; cost‑effective for low- to medium-scale workloads

- Provisioned Throughput (PTU) – high‑volume, consistent performance, predictable cost

- Batch – low‑cost bulk processing of large data jobs

Each option fits a different usage pattern, from testing to mission‑critical workloads.
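The guidance above can be condensed into a rough decision helper. The sketch is a simplification: `suggest_deployment` and its inputs are hypothetical, and the high-volume threshold is a value you would set from your own traffic and pricing, not a documented limit.

```python
from enum import Enum


class DeploymentType(Enum):
    STANDARD = "standard"
    PROVISIONED = "provisioned"
    BATCH = "batch"


def suggest_deployment(needs_realtime: bool,
                       sustained_tokens_per_min: int,
                       needs_consistent_latency: bool,
                       high_volume_tpm: int) -> DeploymentType:
    """Map workload traits to a deployment type (hypothetical heuristic).

    high_volume_tpm is a caller-chosen threshold, not an official figure.
    """
    if not needs_realtime:
        # Offline bulk jobs that can wait suit low-cost batch processing.
        return DeploymentType.BATCH
    if needs_consistent_latency or sustained_tokens_per_min >= high_volume_tpm:
        # High-volume or mission-critical traffic benefits from reserved capacity.
        return DeploymentType.PROVISIONED
    # Everything else: flexible, on-demand capacity.
    return DeploymentType.STANDARD


THRESHOLD = 500_000  # illustrative threshold only
print(suggest_deployment(False, 2_000_000, False, THRESHOLD).value)  # batch
print(suggest_deployment(True, 50_000, False, THRESHOLD).value)      # standard
print(suggest_deployment(True, 50_000, True, THRESHOLD).value)       # provisioned
```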

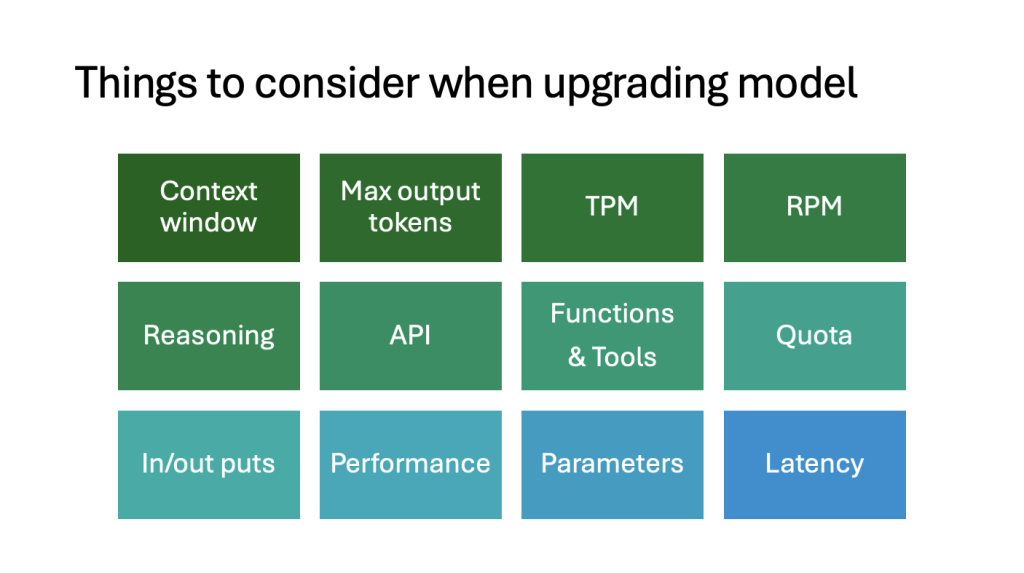

Model Upgrades, Notifications & Auto‑Updates

Foundry provides multiple layers of protection against unexpected model changes:

- Automatic upgrades (optional)

- Explicit version pinning

- Retirement notifications to subscription owners and readers

- 30–90 days' advance notice, depending on the model type

- Two‑week auto‑update windows for deployments configured to upgrade automatically to the default version

Teams get clear visibility into upcoming model changes, which makes production operations safer.
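The difference between pinning a version and following default updates can be expressed as a function of the date. In this sketch the `UpgradePolicy` values, the `Deployment` record, and `versions_served_on` are hypothetical; it assumes an auto-updating deployment may switch at any point inside the two-week window, and that a pinned deployment stops responding once its version retires.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from enum import Enum
from typing import FrozenSet


class UpgradePolicy(Enum):
    AUTO_TO_DEFAULT = "auto"  # follow the new default version
    PINNED = "pinned"         # stay on an explicit version


@dataclass(frozen=True)
class Deployment:
    name: str
    version: str
    policy: UpgradePolicy


def versions_served_on(dep: Deployment, day: date, new_default: str,
                       window_start: date, pinned_retirement: date,
                       window_days: int = 14) -> FrozenSet[str]:
    """Return the versions `dep` might serve on `day`; empty means it no longer responds."""
    if dep.policy is UpgradePolicy.PINNED:
        # A pinned deployment keeps its version until that version retires.
        return frozenset({dep.version}) if day < pinned_retirement else frozenset()
    window_end = window_start + timedelta(days=window_days)
    if day < window_start:
        return frozenset({dep.version})
    if day < window_end:
        # The exact switch time inside the window is not known in advance.
        return frozenset({dep.version, new_default})
    return frozenset({new_default})


auto = Deployment("chat-auto", "v1", UpgradePolicy.AUTO_TO_DEFAULT)
pinned = Deployment("chat-pinned", "v1", UpgradePolicy.PINNED)
window_start = date(2025, 3, 1)  # illustrative dates only
retirement = date(2025, 9, 1)

for day in (date(2025, 2, 28), date(2025, 3, 5), date(2025, 3, 20), date(2025, 9, 1)):
    print(day,
          sorted(versions_served_on(auto, day, "v2", window_start, retirement)),
          sorted(versions_served_on(pinned, day, "v2", window_start, retirement)))
```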

Preparing for Model Deprecations

To stay future‑proof:

- Test new versions early

- Monitor Foundry notifications

- Use A/B testing before switching

- Review fine‑tuned model schedules, which follow a different retirement lifecycle

- Avoid using Preview models in production
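Several items on this checklist can be automated as a periodic audit over an inventory of deployments. The sketch below is hypothetical: the `DeploymentInfo` fields and the `audit` function are not a Foundry API, the inventory would come from your own records or management tooling, and the 90-day look-ahead simply mirrors the upper end of the notice period mentioned earlier.

```python
from dataclasses import dataclass
from datetime import date
from typing import Iterable, List, Optional


@dataclass(frozen=True)
class DeploymentInfo:
    name: str
    stage: str                  # "preview", "ga", "legacy", "deprecated", "retired"
    environment: str            # e.g. "production" or "staging"
    retirement: Optional[date]  # announced retirement date, if any
    fine_tuned: bool = False


def audit(deps: Iterable[DeploymentInfo], today: date, lookahead_days: int = 90) -> List[str]:
    """Return human-readable findings for deployments that need attention."""
    findings = []
    for d in deps:
        if d.stage == "preview" and d.environment == "production":
            findings.append(f"{d.name}: Preview model in production")
        if d.stage in ("legacy", "deprecated"):
            findings.append(f"{d.name}: model is {d.stage}; plan a migration")
        if d.retirement is not None:
            days_left = (d.retirement - today).days
            if days_left < 0:
                findings.append(f"{d.name}: retirement date has passed")
            elif days_left <= lookahead_days:
                findings.append(f"{d.name}: retires in {days_left} days")
        if d.fine_tuned:
            findings.append(f"{d.name}: fine-tuned; check its separate retirement schedule")
    return findings


# Hypothetical inventory with illustrative dates.
inventory = [
    DeploymentInfo("chat-prod", "preview", "production", None),
    DeploymentInfo("summariser", "deprecated", "production", date(2025, 7, 1)),
    DeploymentInfo("ft-classifier", "ga", "staging", None, fine_tuned=True),
    DeploymentInfo("search", "ga", "production", date(2026, 1, 1)),
]
for finding in audit(inventory, today=date(2025, 6, 1)):
    print(finding)
```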

The platform also supports in‑place and multi‑deployment migrations, making transitions more controlled.
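A/B tests and multi-deployment migrations both need a stable way to split traffic between a current and a candidate deployment. One common technique, sketched below under the assumption that each request carries a stable key such as a user ID, is to hash that key into a bucket; `pick_deployment` and the deployment names are hypothetical, and this is not a description of how Foundry performs migrations.

```python
import hashlib


def pick_deployment(request_key: str, current: str, candidate: str,
                    candidate_share: float) -> str:
    """Deterministically route about `candidate_share` of keys to `candidate`."""
    if not 0.0 <= candidate_share <= 1.0:
        raise ValueError("candidate_share must be between 0 and 1")
    digest = hashlib.sha256(request_key.encode("utf-8")).digest()
    # Map the hash to one of 10,000 buckets (0.01% granularity).
    bucket = int.from_bytes(digest[:8], "big") % 10_000
    return candidate if bucket < candidate_share * 10_000 else current


# Send roughly 10% of users to the new version; the same user always lands on
# the same deployment, which keeps A/B comparisons consistent.
routes = [pick_deployment(f"user-{i}", "chat-v1", "chat-v2", 0.10) for i in range(10_000)]
print(routes.count("chat-v2"))  # approximately 10% of the 10,000 keys
```

Raising `candidate_share` step by step turns the same function into a gradual migration, and setting it back to 0 is an immediate rollback.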

Why This Matters

AI models evolve quickly, and the systems that support them must evolve too.

The new Microsoft Foundry lifecycle ensures:

- Predictable planning

- Greater transparency

- Lower operational risk

- Faster innovation at scale

- Compliance‑friendly governance

As AI adoption grows across industries, this lifecycle guidance gives organisations the confidence to build AI solutions that stay reliable, not just today but long into the future.